Much of what you (founders, managers and VCs) know about AI comes from the points of view of engineers. At Sparrow Capital, I have been in many conversations where all I could do was accept what I was told: the capabilities of the AI, its accuracy and, among other things, how it is the next big thing. I am sure you have been in a handful of those conversations as well, only to scratch your head without a clue.

Thanks to Prof Sendhil Mullainathan's AI class at Chicago Booth, I only recently realized how incomplete that worldview is and how little we know about understanding algorithms.

And more importantly, it is our job — as business people — to design and evaluate algorithms better.

That being said, this post is not about building an algorithm. A lot is already written about that. This post is about understanding the schematics of designing and evaluating one.

AI is a mathematical regression between input and output variables

AI applications have two algorithms: (1) a training algorithm, and (2) a deployment algorithm.

The training algorithm learns from your training data. It is nothing but a regression between input (X) and output (Y). Essentially, the model runs a regression to understand which predictors (columns in your data) best explain Y. Engineers will give you a lot of fancy words for this, but remember, at the end of the day it is just regression.

Credits: Class notes from Prof Sendhil Mullainathan's Artificial Intelligence class at University of Chicago Booth School of Business

Then there is the deployment algorithm, which applies what was learned to the actual deployment (real-life) data. Note that this algorithm sees the (X) in real life and predicts (Y) based on what was learned from the training data.

It is important to understand that the deployment algorithm never really sees the training data. It uses the predictor built by the training algorithm. So how we train the algorithm pretty much decides how it will fare in real life.
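To make the split concrete, here is a minimal sketch in Python. It assumes a single predictor and made-up numbers; the names `train` and `deploy` are my own illustration, not terms from the class. The point is only that the deployment step receives a new X and the learned predictor, never the training rows.

```python
# Hypothetical toy data (made up for illustration): X could be ad spend, Y sales.
train_x = [1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [3.0, 5.0, 7.0, 9.0, 11.0]

def train(xs, ys):
    """Training algorithm: ordinary least squares with one predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

def deploy(model, x):
    """Deployment algorithm: sees only a new X and the learned predictor."""
    slope, intercept = model
    return slope * x + intercept

model = train(train_x, train_y)      # learned once, from training data
prediction = deploy(model, 10.0)     # applied to an X the model has never seen
print(model, prediction)
```

Real systems use many more predictors and fancier fitting procedures, but the shape is the same: whatever was wrong with the training rows is baked into `model` before deployment ever starts.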

Datafication is perhaps the most important part of AI, and your job

Okay! I did want to steer away from buzzwords but could not avoid talking about datafication. It is a good buzzword (or, not) that encompasses all things data about AI — from data acquisition to data evaluation.

You will be surprised to know that most AI applications fail (or don't do a good job) because the data was designed poorly. So whenever you see an AI product, remember that your job is to ask:

- Where is the data acquired from? Is it proprietary?

- What are the input variables (X)? How many rows are in the data, and do they sufficiently represent the variety of inputs that the algorithm will see in the real world, i.e. in deployment?

- What is the output (Y) variable? Who labelled it? How credible are the people who labelled this data?

Data acquisition becomes super critical if the scope pertains to areas such as healthcare. Much of healthcare data sits across isolated hospital systems, often without a common identifier: a patient's unique ID could be different in system 1 and system 2. In addition, owners of data do not know how to value this data, or what kind of alliances to forge to leverage it. Those templates do not exist today.

For example, building an algorithm to detect cardiovascular problems using ECG data looks simple. Intuitively, we can train on ECG records acquired from a hospital system. But the question to ask is: is that data representative of the broader population in real life? What if this hospital system is particularly popular among people of one ethnicity or economic group?

In addition, training data could have dispersion problems, i.e. it does not have enough variety of rows when compared with deployment data.

For example, building a product that uses traffic cameras to detect motorcyclists in India who are not wearing helmets looks simple. We could train on image or video data. But again, our job is to ask: has the team taken into account (for X) images in rain, images in black and white, and images taken under different kinds and qualities of traffic lights?

Training data must be as similar to deployment data as possible.
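One rough way to ask this question with data in hand is to compare how often each condition shows up in training versus deployment. The sketch below is in Python and assumes each image has already been tagged with a condition; the tags, the counts and the `min_ratio` cutoff are all hypothetical choices of mine.

```python
from collections import Counter

# Hypothetical condition tags for each image (made-up counts for illustration).
training_conditions = ["day_clear"] * 900 + ["day_rain"] * 80 + ["night_clear"] * 20
deployment_conditions = (["day_clear"] * 500 + ["day_rain"] * 200
                         + ["night_clear"] * 200 + ["night_rain"] * 100)

def coverage_gaps(train, deploy, min_ratio=0.5):
    """Return conditions whose training share is below min_ratio times their deployment share."""
    train_share = {k: v / len(train) for k, v in Counter(train).items()}
    deploy_share = {k: v / len(deploy) for k, v in Counter(deploy).items()}
    gaps = {}
    for condition, needed in deploy_share.items():
        seen = train_share.get(condition, 0.0)  # 0.0 if never seen in training
        if seen < min_ratio * needed:
            gaps[condition] = (seen, needed)
    return gaps

gaps = coverage_gaps(training_conditions, deployment_conditions)
for condition, (seen, needed) in sorted(gaps.items()):
    print(f"{condition}: {seen:.0%} of training vs {needed:.0%} of deployment")
```

With these made-up counts, rain and night images are flagged as under-represented. The cutoff is arbitrary; what matters is that someone on the team has looked at this comparison at all.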

If that was not enough, you will see even less attention being paid to the output variable (Y). Remember, we talked about AI being a mathematical regression: the product does what we train it to do. If the (Y) variable in our training data is labelled by someone, our product will automate what that “someone” does!

For example, we may want to detect hate speech, or predict which candidates will be accepted by looking at their CVs. In both cases, the question we must ask is: who labelled the training data as hate speech, or who hired these candidates? Because we will be automating their judgement.

Even after that, there is a bigger problem. The algorithm will detect what these labellers think is hate speech, not ground truth. Who gives a set of people the right to decide what is or is not hate speech? Or, in the case of hiring, the product mimics the behavior of the very employers it was meant to help or improve upon in the first place.

Always look under the hood to understand the design choices made by the product

Your choice of data determines the scope of the project — Automation/Prediction.

Automation is about replicating or bettering human judgement, while prediction is about measuring ground truth.

Identifying products on a quality inspection line is automating the judgement of the humans who work on that line. Again, do ask: is the training data representative of the real world?

Prediction is always about ground truth. Will this customer churn or expand? Will we have rain tomorrow? Will this person have high risk of cancer? Again, having the right data (as discussed above) is very important.

Let us talk about cancer risk prediction. Trying to build such an algorithm based on healthcare system data might not get us what we want!

Because this training data contains only what doctors thought of as high risk. What about patients that doctors missed? What about patients who are asymptomatic and did not show up at the hospital? And were we not trying to build this algorithm to aid those very doctors in the first place?

You see how this problem has many data-level challenges? Ground truth is missing. It won't be solved unless we aggregate data at a much broader level, have output variables for the population that was asymptomatic, and much more.

How do we acquire this data? What will this data cost? How will we get past data privacy concerns? These are some of the questions that plague such problems and are often overlooked.

On the other hand, what if we could solve a smaller scope of this problem as automation? Simply helping clinical staff detect signs faster (a particular spot in an X-ray, for example), which helps them decide whether additional diagnosis is needed. We are not predicting cancer here but automating the workflow of clinical staff through image detection.

In the wake of tall claims, you must ask whether the data strategy lends itself well to prediction. Downsize the scope of the problem to automation if required.

AI projects fail because of bad design choices made by people like us

I hope you can see that there is a lot going on that could make or break an AI project. I cannot emphasize enough that, contrary to popular opinion, it is our job to design these systems, not the engineers'.

There is a laundry list of projects that have failed, including Microsoft's Tay chatbot, IBM Watson, Amazon's AI recruitment system and Google's diabetic retinopathy detection.

I cannot for a moment believe that all these Big Tech companies lack the engineering might needed to build such systems. Yet they failed. I reckon it is rather the lack of design thinking among business people at these companies. And perhaps, the need to launch something prematurely to play the hype cycle.

I was further amazed to realize that sometimes you don't even need AI for the problem at hand. For example, A/B testing is a simple tool that can help solve problems such as increasing conversion on websites or increasing employee productivity, without hyping these simple problem statements under the wrapper of AI. Sometimes the cost of building these algorithms outweighs the intended benefits.
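To show how little machinery an A/B test needs, here is a minimal Python sketch of a two-proportion z-test on website conversions. The visitor and conversion counts are made up, and the choice of this particular test is my assumption; your analytics team may prefer a different one.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rate between variants A and B."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    # Standard normal CDF written with math.erf, so no extra libraries are needed.
    normal_cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - normal_cdf)
    return z, p_value

# Hypothetical experiment: 5,000 visitors per variant, 200 vs 260 conversions.
z, p_value = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
```

A small p-value suggests the difference between the two page variants is unlikely to be chance. No training data, labels or model are involved, which is exactly why it is worth trying before reaching for AI.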

Avoid statistical measures while evaluating algorithms

Say the algorithm is built well, and addresses some of the concerns raised above. How do we measure it?

Stay clear of AUC, accuracy metrics, and all other statistical metrics that are supposed to tell us how superior the algorithm is. Say that 10 more times.

For one, these metrics don't mean anything without context. For two, many times they are self-evaluated. These are two big and separate problems.

Any algorithm you see has been tuned to operate with a certain false positive and false negative rate. Someone somewhere has made this choice. This is called the payoff.

Accuracy can be inflated by accepting a higher false positive rate than required.

While you can't build an algorithm without a false positive rate and a false negative rate, what often gets missed is the context: what is the reasoning behind such a choice?

More than their mere presence, we should look for the context, i.e. whether someone has thought through the payoff vis-a-vis the problem statement at hand, and why they made a particular payoff (design) choice.
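A small Python sketch may help show where that choice hides. Most models output a score, and someone picks a cutoff that turns the score into a yes or no. The scores, labels and cutoffs below are made up for illustration.

```python
# Hypothetical model scores (0 to 1) and true labels (1 = positive). Made-up values.
scores = [0.95, 0.85, 0.70, 0.65, 0.55, 0.45, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]

def confusion(scores, labels, threshold):
    """Count (true pos, false pos, true neg, false neg) at a given cutoff."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and label == 1:
            tp += 1
        elif predicted == 1 and label == 0:
            fp += 1
        elif predicted == 0 and label == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn

for threshold in (0.8, 0.6, 0.25):
    tp, fp, tn, fn = confusion(scores, labels, threshold)
    accuracy = (tp + tn) / len(labels)
    print(f"cutoff {threshold}: false positives={fp}, "
          f"false negatives={fn}, accuracy={accuracy:.0%}")
```

In this toy data the cutoffs 0.8 and 0.6 report the same accuracy, yet one makes no false alarms and misses two cases while the other trades a miss for a false alarm. The accuracy figure alone cannot tell you which trade was made, or why.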

Second, you will be amazed to know that most of these evaluations are self-evaluations. Engineers typically keep 10–20% of the training data aside as a holdout set. The idea is to test the deployment algorithm on this holdout set and measure accuracy. But, as we have discussed above, if the training data itself has challenges, testing accuracy on the holdout set means nothing.
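Here is a deliberately simple Python sketch of why a holdout score can flatter a model. Everything in it is hypothetical: the data is made up, the "model" is a single cutoff on one input, and the holdout is simply the last 20% of rows (in practice it is usually a random sample).

```python
def accuracy(rows, threshold):
    """Share of rows where 'x >= threshold' matches the true label."""
    correct = sum(1 for x, y in rows if (1 if x >= threshold else 0) == y)
    return correct / len(rows)

def fit_threshold(rows):
    """Toy training step: pick the cutoff on x with the best accuracy on the given rows."""
    candidates = sorted({x for x, _ in rows})
    return max(candidates, key=lambda t: accuracy(rows, t))

# Hypothetical data collected from one source: label is 1 whenever x >= 5.
collected = [(x, 1 if x >= 5 else 0) for x in range(10)] * 10   # 100 rows

# Keep the last 20% aside as the holdout set and train on the rest.
training, holdout = collected[:80], collected[80:]

# Hypothetical deployment population where the relationship differs:
# the label is 1 only when x >= 8.
deployment = [(x, 1 if x >= 8 else 0) for x in range(10)] * 10

threshold = fit_threshold(training)
holdout_accuracy = accuracy(holdout, threshold)
deployment_accuracy = accuracy(deployment, threshold)
print(threshold, holdout_accuracy, deployment_accuracy)
```

The holdout rows come from the same source as the training rows, so they share the same blind spots and the score looks perfect. The deployment rows do not, and the score drops. The holdout number was never wrong; it just answered a different question.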

At the same time, as business people, it is our job to evaluate the cost of false positive vs false negative — there can be situations where both are costly. This is part of our product design choices — the payoff we are willing to live with.

No algorithm in the world can be built without a payoff choice between false positives and false negatives. Don't believe people who tell you otherwise.
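The business side of this choice fits in a few lines of Python. The sketch assumes the team can offer a few operating points and that we can put a rough cost on each kind of error; the names, counts and costs are all made up.

```python
# Hypothetical operating points: (name, false positives, false negatives) per 1,000 cases.
operating_points = [("strict", 5, 40), ("balanced", 20, 20), ("lenient", 60, 5)]

def total_cost(false_positives, false_negatives, cost_fp, cost_fn):
    """Business cost of an operating point, given the cost of each error type."""
    return false_positives * cost_fp + false_negatives * cost_fn

def cheapest(points, cost_fp, cost_fn):
    """Name of the operating point with the lowest total cost."""
    best = min(points, key=lambda p: total_cost(p[1], p[2], cost_fp, cost_fn))
    return best[0]

# Scenario A (assumed): a missed case costs 50x a false alarm, e.g. a screening tool.
print("Scenario A:", cheapest(operating_points, cost_fp=1, cost_fn=50))
# Scenario B (assumed): a false alarm costs 50x a miss, e.g. wrongly blocking a customer.
print("Scenario B:", cheapest(operating_points, cost_fp=50, cost_fn=1))
```

The same three options produce opposite answers depending on the costs we plug in. Those costs come from the business, not from the model, which is why this decision sits with us.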

Best way to evaluate an algorithm is to give it real life data it has never seen

In a world where it is increasingly easy to create hype cycles, peeling through to what is going on in ‘plain simple English’ becomes far more important.

This becomes even more important if you are a buyer of an AI product. You must always ask a potential seller to test the accuracy on your data. If they say they can help predict Y, tell them: let us give you our company data (X); why don't you tell us the Y values from your algorithm, and we will compare them with our real Y values. Let us measure accuracy that way.

What you have done here is avoid a statistical heuristic in favor of evaluating the algorithm on the real-life problem statement that it is designed to tackle.
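The comparison itself needs nothing fancy. A minimal Python sketch, assuming we hold the real outcomes keyed by a record ID and the seller returns predictions for the same IDs (the IDs and values below are made up):

```python
def score_vendor(actual, predicted):
    """Compare a vendor's predictions with outcomes we already know, keyed by record ID."""
    shared = [key for key in actual if key in predicted]
    missing = [key for key in actual if key not in predicted]
    correct = sum(1 for key in shared if actual[key] == predicted[key])
    false_positives = sum(1 for key in shared if predicted[key] == 1 and actual[key] == 0)
    false_negatives = sum(1 for key in shared if predicted[key] == 0 and actual[key] == 1)
    return {
        "accuracy": correct / len(shared) if shared else None,
        "false_positives": false_positives,
        "false_negatives": false_negatives,
        "missing": missing,  # records the vendor returned no answer for
    }

# Hypothetical: our real Y values (1 = customer churned), which the vendor never sees.
our_actual_y = {"c1": 1, "c2": 0, "c3": 1, "c4": 0, "c5": 1, "c6": 0}
# Hypothetical: what the vendor's algorithm returned after seeing only our X.
vendor_predicted_y = {"c1": 1, "c2": 1, "c3": 0, "c4": 0, "c5": 1}

report = score_vendor(our_actual_y, vendor_predicted_y)
print(report)
```

The key design choice is that the real Y values stay with us until the predictions are in, so the seller cannot tune against them. Looking at false positives, false negatives and unanswered records separately also brings the payoff question back into the conversation.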

In addition, the industry needs to move away from measuring AI using statistical measures in general and tie algorithms to the real-time business metrics that they are designed to influence in the first place.

“Our model improved revenue by 2% QoQ” is a better way to measure than “our model is 98% accurate in predicting customers who are going to make a repeat purchase.”

Lastly, even if this is built, will it be useful?

Engineers will build anything just because it is cool, but that does not mean it is needed. Uggh! I am tempted to write about crypto, but I will not digress.

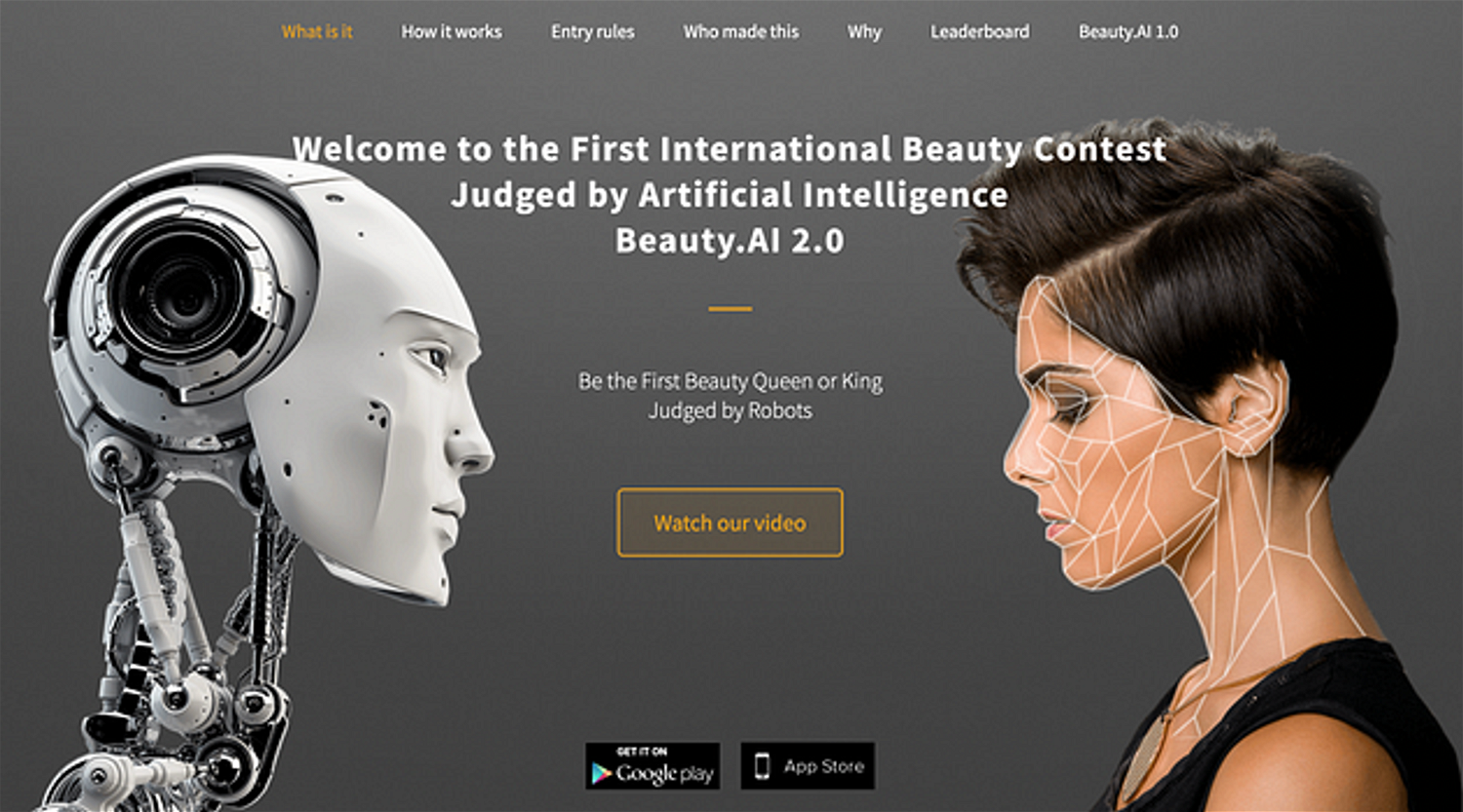

Take the example of Beauty.ai, which uses AI to judge how ‘beautiful’ a person is. Of course there is a data error here. Who labelled the training data as beautiful? Perhaps white people, because it failed to label people with darker skin as beautiful.

Credits: www.beauty.ai

Keeping the data error aside, why do we need this in the first place?

Without getting into AI ethics, I will leave this at three simple questions that must be asked of every AI project we encounter.

- What is the business use case? Who will buy it? Which identified user in the value chain is going to get identified benefits from this technology?

- If an identified user will get identified benefits, is the cost of building this technology lower than the upside?

- If #1 and #2 are true, can we sell it? Can we convince the buyer to implement necessary changes in their systems and processes to make this work?

Summing up

Remember, AI is nothing but a regression model that you are very much used to running on MS Excel, only with some added complexity.

Pun? Maybe? Maybe Not!

Let me know what you think in the comments below!
